A group of 1,100 prominent tech figures, including Elon Musk, has called on companies to pause their AI development. The signatories, who include Apple cofounder Steve Wozniak and several leading artificial intelligence researchers, have signed an open letter seeking a six-month halt to AI development.

Other signatories include Emad Mostaque, founder and CEO of Stability AI, the company that helped create the Stable Diffusion text-to-image model; Connor Leahy, CEO of Conjecture; Evan Sharp, cofounder of Pinterest; and Chris Larsen, cofounder of the cryptocurrency company Ripple.

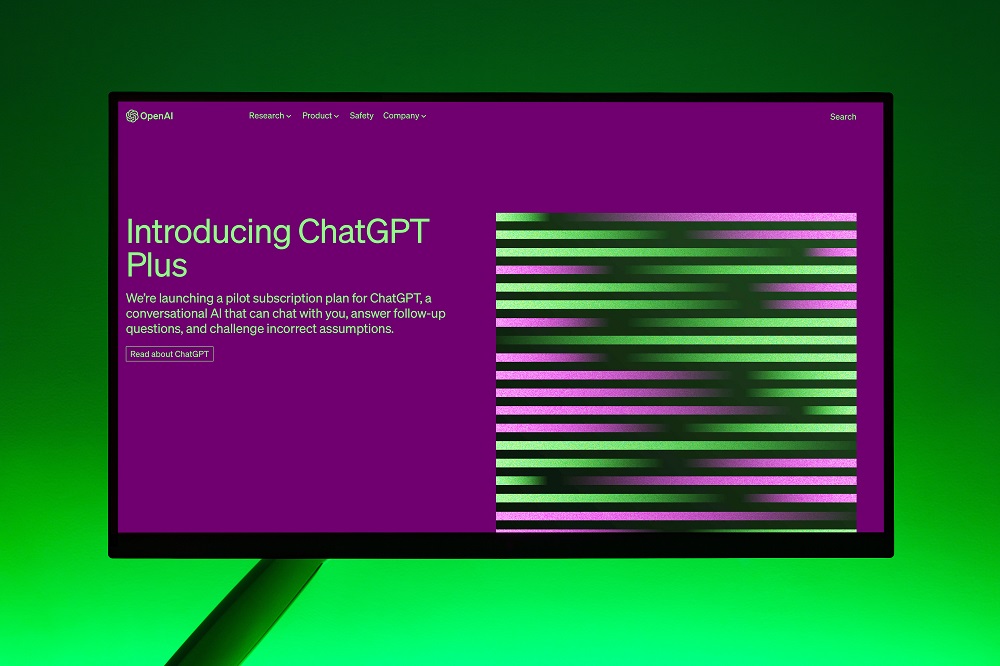

The signatories have called on tech companies to immediately stop training AI systems “more powerful than GPT-4.” GPT-4 is OpenAI’s latest large language model and the successor to GPT-3.5, which powered the original ChatGPT chatbot. Since its launch, the viral chatbot has disrupted the tech world with its ability to give detailed responses to user queries.

Elon Musk wants powerful AI development to stop

Musk, who heads SpaceX, has been open about his concerns over AI. Recently, he has distanced himself from OpenAI, the company behind ChatGPT, criticizing it over its direction and its close partnership with Microsoft. Interestingly, Musk was one of OpenAI’s original cofounders before leaving the company in 2018. Meanwhile, Google has launched its own AI chatbot, Bard, in response to ChatGPT and is preparing several more AI tools for launch.

OpenAI’s early success has caused an uproar in the tech industry and drawn attention to the technology’s potential dangers. As a result, many developers and researchers have grown alarmed about where future development could lead.

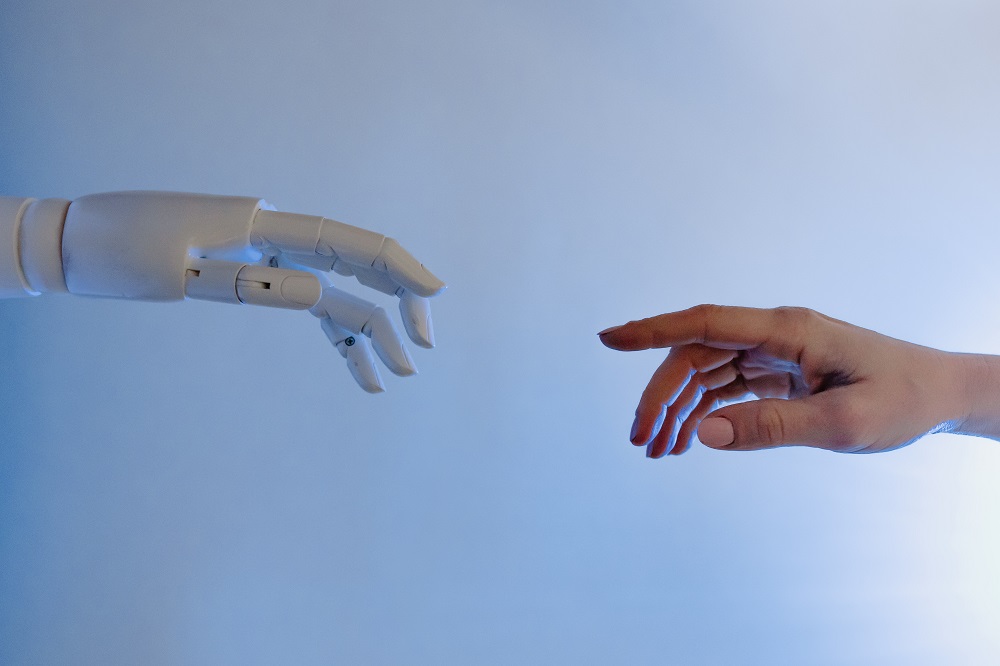

The prospect of these AI systems replacing human workers in tasks ranging from writing essays to highly complex work such as coding has deeply concerned a large section of the tech community. Some believe that an unchecked AI race could produce systems that exceed human intelligence, with potentially dangerous consequences.

The letter warns that AI systems such as GPT-4 are now “becoming human-competitive at general tasks,” which means they could be used to spread misinformation on a wide scale and to automate jobs en masse. It also raises concerns that such advanced tools could be on a path to superintelligence, which could pose an even greater risk to human civilization. It argues that powerful AI systems should only “be developed once we are confident that their effects will be positive and their risks will be manageable.”


Stop training the AI!

In the letter, the signatories call on AI labs to stop training such systems for at least six months. They further assert that governments should step in and institute a moratorium if companies fail to comply. The letter adds that during this time, AI developers should work with policymakers to “dramatically accelerate the development of robust A.I. governance systems.”

The letter does not seek a complete halt to AI development; rather, it calls for a pause on developing more powerful systems. “AI research and development should be refocused on making today’s powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal,” the letter says.

During the six-month pause, AI labs should jointly develop shared safety protocols for advanced AI design that are independently audited by outside experts.

So far, neither OpenAI nor the other companies developing these powerful AI models have responded to the open letter.

Do you believe that AI has reached a point where the AI vs humans debate has gained real substance? Do share your insights in the comments below.